Hello,

I am building a server for one of my small business clients. It's a Dell PowerEdge 2950 with a Perc 5i RAID controller on board. This is an older server, but it is plenty powerful for what I need out of it. The idea is that this server will be used as an iSCSI target for an ESXi host. I don't know where the server originally came from, but when I got it from the client to build out, it only had 3 hard drives in it of various sizes, none big enough for a storage server. I had a collection of old ST3450856SS hard drives, 450GB SAS disks, from an old HP MSA2000 storage frame. I got the additional drive cages as required and mounted 6 of these old HP SAS disks into the server. The Perc 5i controller picked them up with no problem, and I was able to create a RAID5 set and off I went... however, I soon discovered some issues.

The Perc 5i created the drive array with no problem and it was accessible, but when I tried to run the CrystalDiskMark benchmarking tool on the server, the controller would drop out with Windows Event error 129, "Reset to device, \Device\RaidPort1". The read portion of the benchmark was fine, but as soon as it switched to the write tests it would die, sometimes right away, at other times a few runs in. I started looking for a solution and updating everything, and one of the things that I wanted to update was the hard drive firmware. I searched Google for the model number ST3450856SS plus "firmware" and found that Dell also uses these drives in some of their servers, so I thought I would just flash the newest firmware from Dell onto these and it would be happy... but this was not so easy. Actually, it was not easy at all.

On this Dell server I installed Dell OpenManage Server Administrator, and it showed that the disks were detected as ST3450856SS with firmware version 0007, and that the latest firmware available from Dell for this type of disk was HS11.

I downloaded the Windows firmware update package called SAS-Drive_Firmware_288PJ_WN32_HS11_A07.exe, assuming that I would be able to just run the program and it would update all the drives. This was not the case. The package does some validation to see whether the drives you have will actually work with this firmware, and it returned this message:

This update package is not compatible with your system configuration

Great, so I started looking online to see what this means, and there isn't much information other than advice to make sure you have the latest drivers and such from Dell. I do, and none of it worked.

I started working out how I could get around the firmware check, and the first thing I did was extract the firmware update package exe file with UniExtract. This gave me something like this:

Under the payload folder there was the actual firmware file, HS11.fwd. In the root was a file that seems like it would be responsible for the actual loading of the firmware called SASDUPIE.exe, so I did a /? on this executable under CMD and came up with the following path for the firmware update:

SASDUPIE.exe -u -s "%cd%\payload" -f -o update.xml -debug debug.log

When I ran the above command, it finished very quickly, much faster than would be required to upgrade the firmware on 6 SAS disks, so I looked at the debug.log file it created for some clues.

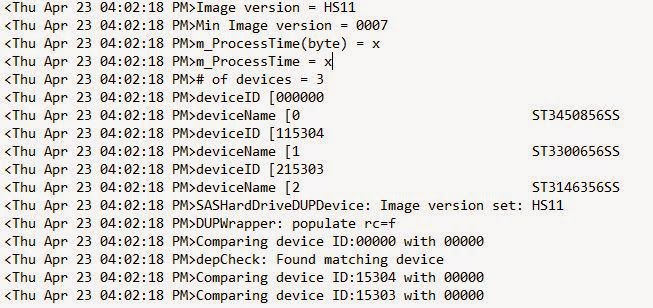

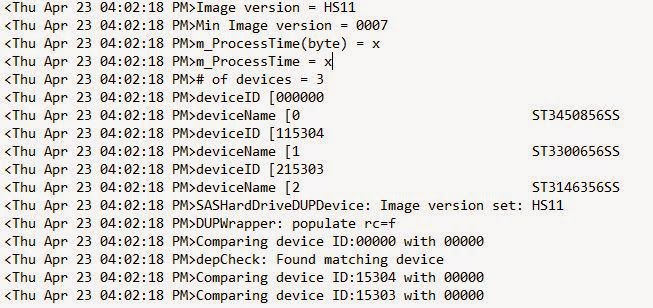

The first clue was the detection process and what it was looking for. The Seagate drives I have were being detected with a Hardware ID of 00000, whereas the firmware was looking for 3 different Hardware IDs, corresponding to the three different drive sizes this one firmware package could upgrade. So somehow, I had to trick the updater into accepting the 00000 it was detecting as the ST3450856SS hard drive. Below is the line in the debug.log that shows the detection process. I didn't capture the original log, but this is what it looks like when it makes a successful detection. Notice the 00000 being detected as the right model number:

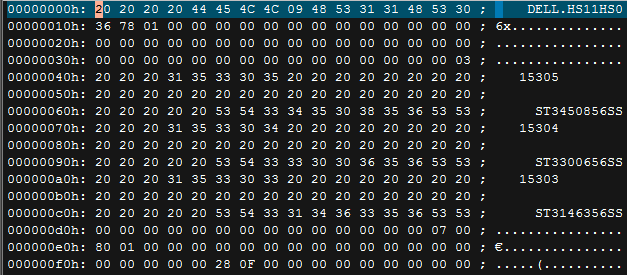

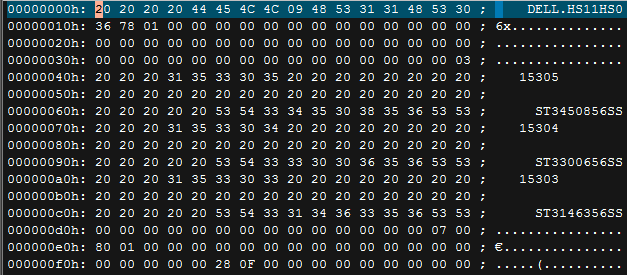

I used a hex editor to open the HS11.fwd firmware file and look inside. During my research into flashing the firmware, I read that a Dell version of the firmware carries a Dell-specific header in the first 256 bits of the file, and that this header is all that differentiates the firmware file from a generic Seagate firmware release. When I opened the file in the hex editor, this is what I saw at the top:

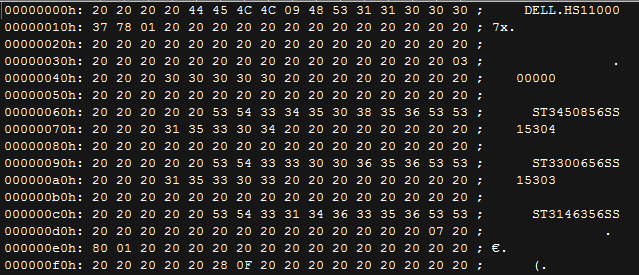

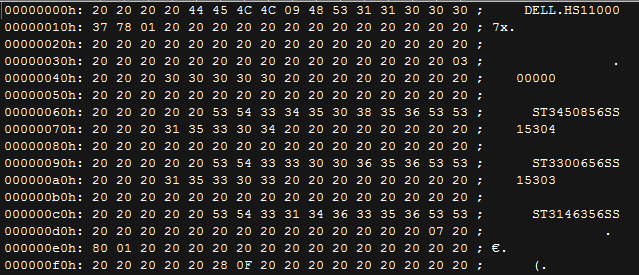

As you can see, there are three sets of numbers on the side: 15305, 15304 and 15303. These are the numbers we saw earlier in the debug log, and each is followed by the model number of a hard drive. For me, 15305 and the model number ST3450856SS are what matter, so I busted out the "Replace" feature in my hex editor and replaced the text 15305 with 00000. I saved the edited file over the original and ran the SASDUPIE.exe command again as before to look at the debug log. This time, I got a message that there was a version check error, and that to install HS11 you need to have HS06 first. My drives were reporting a firmware level of 0007 (from HP), so I had to make more modifications in the hex editor. As you can see in the previous image, the very first line contains DELL.HS11HS06, so I replaced HS06 with 0007 and saved the file again. This is what the file looked like after the changes:

Upon running SASDUPIE.exe command again, it took considerably longer to complete, however, despite now matching the model number and firmware revision, it still failed. I was sensing that I was getting close, and thought that perhaps I need to flash the older version of the firmware first, but it was the end of the day for me so I left it for the next day.

The following day I tracked down the HS0F firmware (F is higher than 6 in Hexadecimal, and HS06 is the minimum required, but I couldn't find that firmware easily) and opened it again in a Hex editor.
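The comparison above rests on my reading that the two characters after "HS" are a hexadecimal number. Here is a minimal sketch of that comparison, assuming the naming scheme really works that way. The helper name `revision_number` is made up for illustration and is not part of any Dell or Seagate tool.

```python
def revision_number(revision: str) -> int:
    """Return the numeric part of a revision string such as "HS0F".

    Assumption: the revision is "HS" followed by two hexadecimal digits.
    Anything else (for example the HP revision "0007") is rejected.
    """
    if len(revision) != 4 or not revision.startswith("HS"):
        raise ValueError(f"unexpected revision format: {revision!r}")
    return int(revision[2:], 16)


# Print the revisions mentioned in this post in ascending order.
for rev in sorted(("HS11", "HS06", "HS0F"), key=revision_number):
    print(rev, revision_number(rev))
```

Under that assumption HS0F sits between HS06 and HS11, which is why it looked like a reasonable stepping stone.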

This time, I made the same changes as before to the HS0F firmware: I replaced HS06 with 0007 and the 15305 model number with 00000. At this point, I think it would work if you were to save the file into the payload directory and run the SASDUPIE.exe command again. I didn't try this, as I had already moved on to a different firmware upgrade process, but in hindsight I think it would have worked right from Windows.
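For anyone who would rather script the edit than click through a hex editor, here is a rough Python sketch of the same two replacements. It is not what I actually ran. The function name `patch_header` and the 256-byte `header_len` default are my own assumptions, so check where the header ends in your own file first. It only touches the start of the file and insists on same-length replacements, so nothing after the header shifts.

```python
def patch_header(data: bytes, replacements: dict, header_len: int = 256) -> bytes:
    """Apply same-length byte replacements inside the first header_len bytes.

    header_len is an assumed value, not a documented header size.
    Only the first occurrence of each pattern in the header is replaced,
    and bytes after the header are returned untouched.
    """
    header = bytearray(data[:header_len])
    for old, new in replacements.items():
        if len(old) != len(new):
            raise ValueError(f"{old!r} and {new!r} differ in length")
        pos = header.find(old)
        if pos == -1:
            raise ValueError(f"{old!r} not found in the header")
        header[pos:pos + len(old)] = new
    return bytes(header) + data[header_len:]


# Made-up stand-in for a firmware file; the real header layout may differ.
sample = b"DELL.HS0FHS06\x00\x00\x0015305ST3450856SS" + b"\xff" * 64
patched = patch_header(sample, {b"HS06": b"0007", b"15305": b"00000"})
print(patched[:32])
```

To use it on a real file, read the bytes with `pathlib.Path(...).read_bytes()`, pass them through `patch_header`, and write the result to a new file so the untouched original stays around as a backup.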

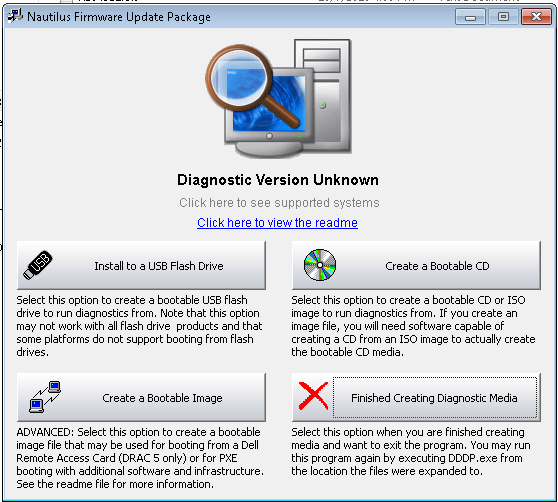

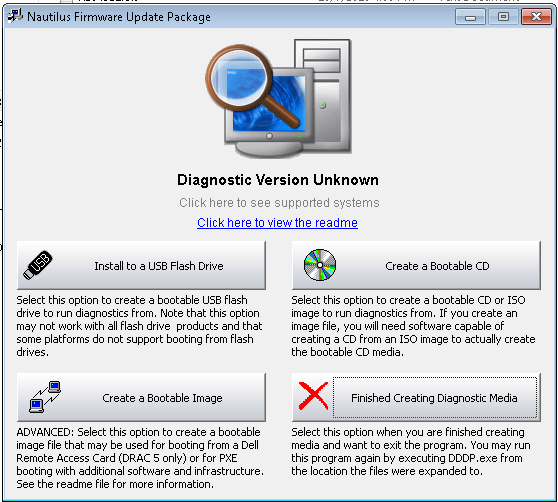

Dell makes a downloadable utility called the "Dell SAS Hard Drive Firmware Utility" which can be installed in Windows, and then used to create a bootable USB drive to do firmware updates without Windows. I ran the utility and it extracted the file contents to C:\Dell\Drivers\RG1GN\. If you navigate further to C:\Dell\Drivers\RG1GN\files\fw\sas\seagate\15K6 you will find all the firmware that is available for the particular Seagate drive. The files found here for HS0F and HS11 are the same files that you would have downloaded earlier, all in one place. I deleted all the other firmware versions and just left the HS0F.FWD file in there, the one I modified earlier to look for 0007 firmware. From this point, if you backtrack to C:\Dell\Drivers\RG1GN\ there is a utility called dddp.exe, run it and click on Install to a USB Flash drive, plug in a USB drive, and let it quickly build.

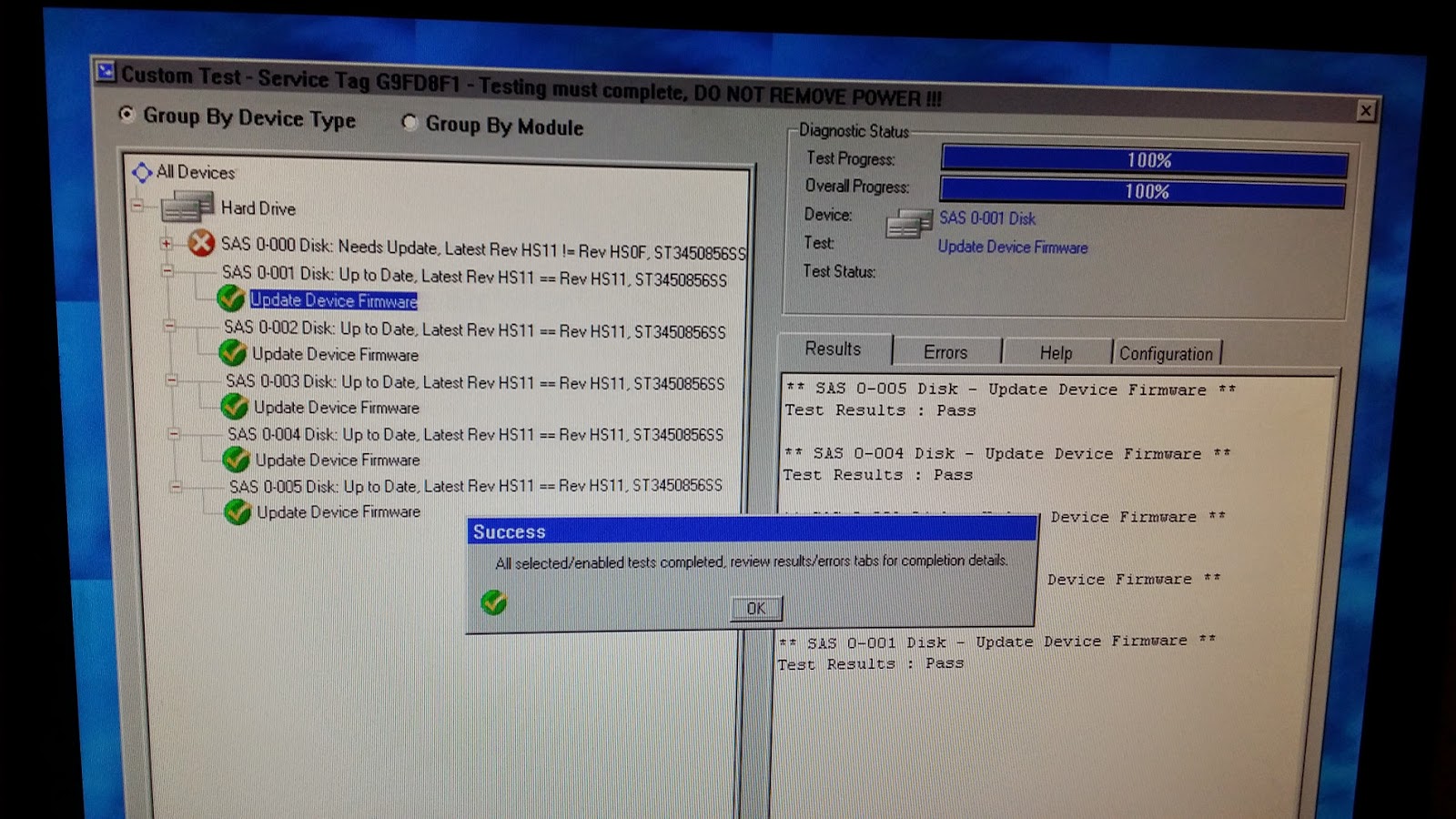

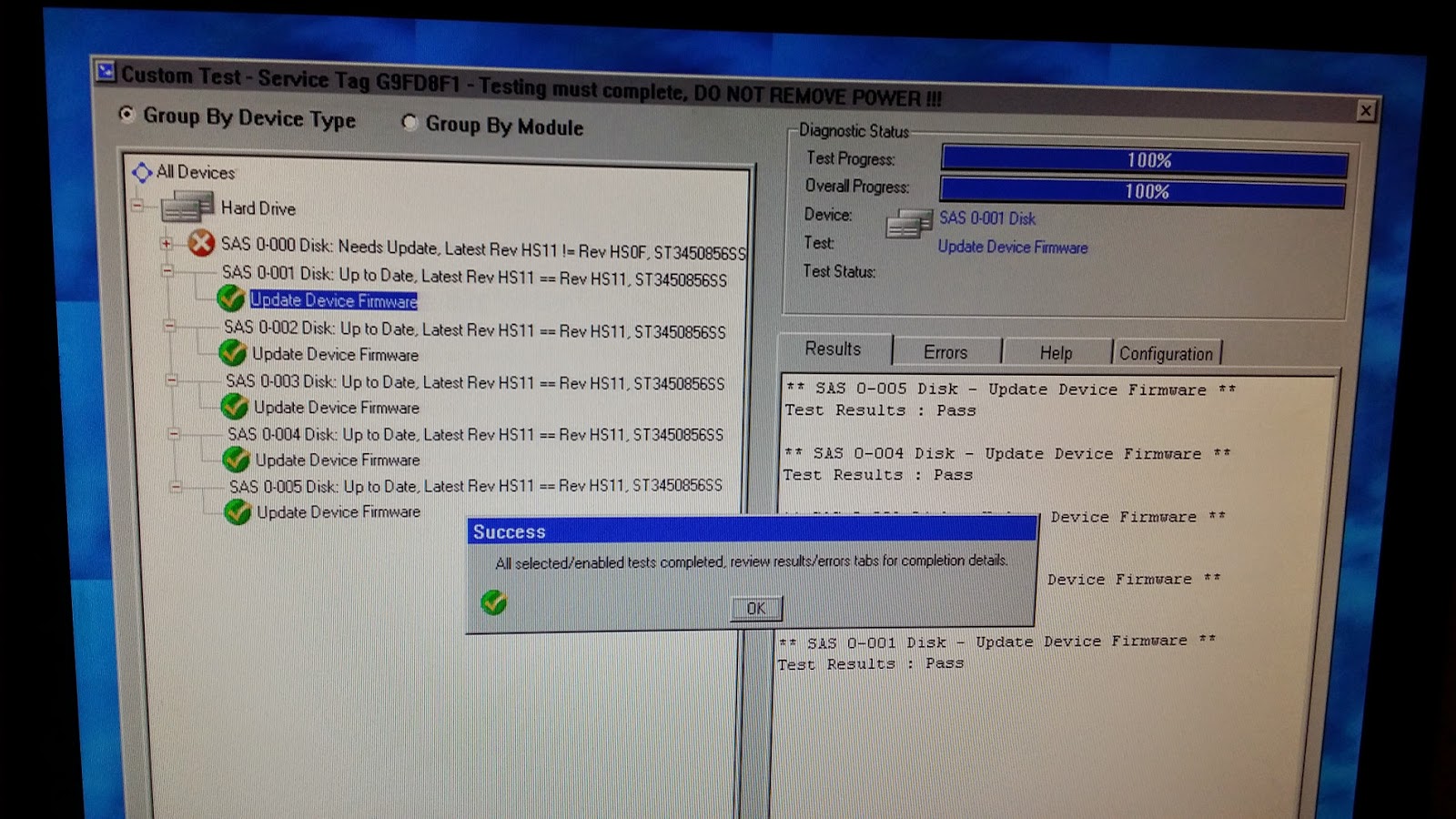

Once I booted off the USB into the interactive mode, it found my drives and said a firmware update was available. I clicked on Update for each drive (it locks your mouse, so don't freak out; it takes about 5 minutes to read the S.M.A.R.T. information twice, and you can see the countdown at the top) and to my surprise, it went! I did this to all 6 disks. After this was all done, I went back into Windows, put the previously edited HS11.fwd file in and rebuilt the boot USB. It failed, but I knew why: version 0007 no longer exists on these drives, they are all now HS0F! Going back into the hex editor, I changed the HS11.fwd file again and set the header back to its original state of DELL.HS11HS06. I rebuilt the boot USB again, and this time, success! All drives were updated to the latest from Dell, HS11! Woo hoo! Unfortunately, it didn't solve my Perc 5 errors in Windows, but at least I knew the drives were not the contributing factor, at least not on the firmware level.

I realize that this is very specific to one version of the drive, but I believe this approach can probably be used with most firmware, and perhaps not only firmware from Seagate, though I haven't tested that theory yet. As long as the firmware appears to have a Dell header, using Dell tools to update it should just be a matter of making similar changes.